A growing number of studies focus on frame interpolation, which synthesizes intermediate images between a pair of input frames. This temporal up-sampling can be used to increase a video's frame rate or to create slow-motion videos.
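
To make "temporal up-sampling" concrete, here is a minimal sketch that doubles a clip's frame rate by inserting one synthesized frame between every consecutive pair. It is a hypothetical illustration, not FILM: the helper name `upsample_by_blending` is invented here, and the in-between frame is a plain pixel average (a cross-fade) standing in for the output of a real interpolation model.

```python
import numpy as np

def upsample_by_blending(frames):
    """Double the frame rate by inserting one frame between each consecutive pair.

    Naive baseline: the in-between frame is a pixel-wise average (cross-fade),
    NOT a motion-aware interpolation like FILM. It only shows where the
    synthesized frames go in the output sequence.
    """
    out = []
    for a, b in zip(frames[:-1], frames[1:]):
        out.append(a)
        out.append(0.5 * (a + b))  # placeholder for an interpolation model
    out.append(frames[-1])
    return out

frames = [np.full((2, 2), v, dtype=np.float32) for v in (0.0, 1.0, 2.0)]
result = upsample_by_blending(frames)
print(len(result))                        # 5
print([float(f[0, 0]) for f in result])   # [0.0, 0.5, 1.0, 1.5, 2.0]
```

A cross-fade like this produces ghosting whenever objects move, which is why motion-aware methods are needed in practice.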

A new application has recently emerged. Because digital photography makes it easy to capture several images in a matter of seconds, people often take multiple shots in rapid succession and then pick the best one. Interpolating between these “near duplicates” reveals the scene (and some camera) motion, often conveying a more engaging sense of the moment than any of the original photographs. However, this poses a significant challenge for conventional interpolation approaches, because the time gap between near duplicates can be a second or more, with correspondingly large scene motion.

Recent approaches have shown promising results on the challenging problem of interpolating between consecutive video frames, which typically exhibit small motion. However, interpolation with large scene motion, as is typical of near duplicates, has received little attention. One earlier study tried to address large motion by training on a dataset with extreme motion, but its performance on small-motion benchmarks was disappointing.

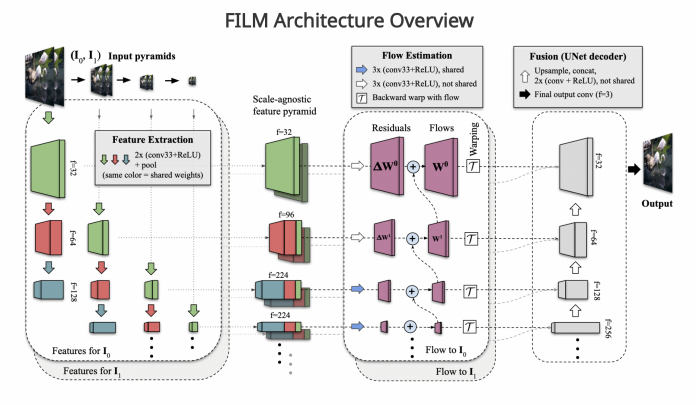

A recent study from Google and the University of Washington proposes FILM (Frame Interpolation for Large Motion), an algorithm for interpolating frames with large motion that focuses on near-duplicate images. FILM is a simple, unified, single-stage model that can be trained on standard frames alone: it does not require optical flow or depth prior networks, nor the limited data used to pre-train them. Its two key components are a multi-scale feature extractor (a “feature pyramid”) that shares the same weights across scales, and a “scale-agnostic” bi-directional motion estimator that learns from normal-motion training frames yet handles both small and large motion well.
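
The weight-sharing idea can be sketched in a few lines of NumPy. This is a simplified, hypothetical illustration, not FILM's extractor: FILM learns multi-layer convolutional features, whereas here a single fixed 3x3 kernel (a Sobel-style edge filter chosen only for illustration) followed by a ReLU is applied to every level of an image pyramid built by 2x2 average pooling. The point is that one set of weights serves all scales.

```python
import numpy as np

def downsample2x(img):
    """Halve the resolution with 2x2 average pooling (odd edges are cropped)."""
    h, w = img.shape[0] // 2 * 2, img.shape[1] // 2 * 2
    img = img[:h, :w]
    return 0.25 * (img[0::2, 0::2] + img[1::2, 0::2] + img[0::2, 1::2] + img[1::2, 1::2])

def conv3x3(img, kernel):
    """'Same'-size 3x3 cross-correlation with zero padding."""
    padded = np.pad(img, 1)
    out = np.zeros_like(img, dtype=np.float64)
    for dy in range(3):
        for dx in range(3):
            out += kernel[dy, dx] * padded[dy:dy + img.shape[0], dx:dx + img.shape[1]]
    return out

def shared_weight_pyramid(img, kernel, levels):
    """Extract features at every pyramid level using ONE shared kernel."""
    feats = []
    for _ in range(levels):
        feats.append(np.maximum(conv3x3(img, kernel), 0.0))  # conv + ReLU
        img = downsample2x(img)
    return feats

img = np.random.default_rng(0).random((64, 64))
sobel_x = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=np.float64)
feats = shared_weight_pyramid(img, sobel_x, levels=4)
print([f.shape for f in feats])  # [(64, 64), (32, 32), (16, 16), (8, 8)]
```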

The approach rests on the assumption that large motion at a fine scale should resemble small motion at a coarse scale. Sharing weights across scales therefore increases the number of pixels available to supervise large motion, because the finer scales have higher resolution.
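
The arithmetic behind this assumption is easy to check. In the hypothetical helper below, each pyramid level halves the resolution, so a displacement of D pixels at full resolution spans D / 2^k pixels at level k. Because the motion estimator's weights are shared across levels, the module that resolves large motion at coarse levels is also trained on the far more numerous pixels of the finer levels. The image size and motion value are illustrative only.

```python
def pyramid_stats(height, width, motion_px, levels):
    """Resolution and apparent motion of one displacement at each pyramid level.

    Level k has 1/2**k of the full resolution per axis (1/4**k of the pixels),
    so a displacement of `motion_px` full-resolution pixels spans
    motion_px / 2**k pixels there.
    """
    return [(k, height >> k, width >> k, motion_px / 2 ** k) for k in range(levels)]

for level, h, w, motion in pyramid_stats(256, 256, 64, 4):
    print(f"level {level}: {h}x{w}, motion {motion:g} px")
# level 0: 256x256, motion 64 px
# level 1: 128x128, motion 32 px
# level 2: 64x64, motion 16 px
# level 3: 32x32, motion 8 px
```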

The researchers observed that even state-of-the-art algorithms that score well on benchmarks frequently produce blurry interpolated frames, especially in large disoccluded regions caused by substantial camera movement. To address this, they optimize their models with a Gram matrix loss, which matches the auto-correlation of high-level VGG features and yields striking improvements in image sharpness and realism.
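
A Gram matrix loss can be sketched as follows, assuming the VGG feature maps of the interpolated and ground-truth frames have already been computed (feature extraction is omitted, and random arrays stand in for them in the usage lines). Normalizing by H*W and taking the mean squared difference between Gram matrices are common choices made here for illustration; FILM's exact layer selection, weighting, and distance may differ.

```python
import numpy as np

def gram_matrix(features):
    """Gram (channel auto-correlation) matrix of a C x H x W feature map, normalized by H*W."""
    c, h, w = features.shape
    flat = features.reshape(c, h * w)
    return flat @ flat.T / (h * w)

def gram_loss(pred_feats, target_feats):
    """Sum over layers of the mean squared difference between Gram matrices.

    `pred_feats` and `target_feats` are lists of feature maps, one per layer,
    assumed to come from a pretrained VGG applied to the interpolated and
    ground-truth frames.
    """
    return sum(
        float(np.mean((gram_matrix(p) - gram_matrix(t)) ** 2))
        for p, t in zip(pred_feats, target_feats)
    )

rng = np.random.default_rng(0)
a = [rng.random((8, 16, 16)), rng.random((16, 8, 8))]  # stand-ins for VGG features
b = [rng.random((8, 16, 16)), rng.random((16, 8, 8))]
print(gram_loss(a, a))      # 0.0
print(gram_loss(a, b) > 0)  # True
```

Because the Gram matrix discards spatial positions and keeps only feature co-occurrence statistics, this loss rewards texture-level sharpness rather than exact pixel alignment.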

Another significant limitation of modern interpolation techniques is their training complexity: many rely on optical flow, depth, or other prior networks pre-trained on limited data, and this data scarcity is especially problematic for large motion. This study also contributes a unified architecture for frame interpolation that can be trained using only standard frame triplets, which greatly simplifies the training procedure.
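
The hypothetical helper below shows how such training triplets could be cut from an ordered clip: the two outer frames are the inputs and the middle frame is the ground truth the model learns to synthesize. The `gap` parameter is an assumption for illustration (a larger gap yields larger motion between the inputs); this is not the paper's data pipeline.

```python
def make_triplets(frames, gap=1):
    """Yield (start, middle, end) training triplets from an ordered frame list.

    The middle frame is the interpolation target; `gap` is the number of
    frames between the middle frame and each input frame.
    """
    for i in range(len(frames) - 2 * gap):
        yield frames[i], frames[i + gap], frames[i + 2 * gap]

print(list(make_triplets(list("abcde"))))
# [('a', 'b', 'c'), ('b', 'c', 'd'), ('c', 'd', 'e')]
print(list(make_triplets(list("abcde"), gap=2)))
# [('a', 'c', 'e')]
```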

Extensive experimental results demonstrate that FILM produces high-quality, temporally smooth videos and outperforms competing approaches on both large and small motion.
